M&S Use

Bolded words or phrases are key terms or acronyms defined under References or concepts discussed in NASA-STD-7009 or NASA-HDBK-7009.

The M&S Use Phase (Phase E) is shown here with added detail in the context of the entire M&S Life Cycle.

M&S Use, taken as a broad and general phase in the M&S Life Cycle, is more than simply using the M&S. There are some tasks to accomplish before and after the actual use, and so this phase is discussed in three sub-phases.

Pre-Use

The needs for overall M&S Use are:

- The Released M&S

- The M&S User’s Guide

- Procedures for M&S Use

- The Infrastructure for M&S Use (M&S H/W & S/W)

Once these items are in hand, each proposed use of the M&S must be evaluated against the permissible uses of the M&S, as determined in M&S development and testing. The following table depicts these similar elements for comparison in a use assessment.

| Permissible Use(s) of Model | Proposed Use(s) of Model |
| --- | --- |
| Type of Use Intended. • Implies the Type of Model. • The application domain (discipline, area of study) of the Model. • The Purpose of the Model. | Type of Use Needed. • Implied by the type of RWS. • The application area (discipline, area of study) of the subject RWS. • The purpose of proposed model use with respect to the RWS. |
| Model’s Abstractions and Assumptions. • Inclusions in the M&S. • Exclusions from the M&S. • Assumptions of M&S form, fit, or function. | Inclusions & Fidelity Needed. • Specific expectations of what is in, or expected of, the M&S. • The desired level of accuracy, precision, & uncertainty of the M&S. |
| Limits of Model Parameters, per • Model design (including any computer H/W or S/W limitations). • Verification. • Validation. | Desired Domain of Use. • With respect to the RWS. • Parameter values the model is expected to represent. |
| Types of Outputs (Results) Produced, including: • Accuracy. • Precision. • Uncertainty. | Type of Results Needed, including: • Accuracy. • Precision. • Uncertainty of Results. |
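The comparison of a proposed use against the permissible uses can be sketched in code. The following is only an illustration under stated assumptions: the class names, field names, and the `assess_use` function are hypothetical and do not come from NASA-STD-7009 or NASA-HDBK-7009, and a real use assessment would also weigh abstractions, assumptions, and fidelity, which are not easily reduced to set and range checks.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass
class PermissibleUse:
    """Hypothetical record of what development and testing established."""
    domains: set[str]                                  # application domains covered
    purposes: set[str]                                 # purposes the model was built for
    parameter_limits: dict[str, tuple[float, float]]   # name -> (min, max)
    output_types: set[str]                             # results the model produces


@dataclass
class ProposedUse:
    """Hypothetical record of what the proposed use needs."""
    domain: str
    purpose: str
    parameter_values: dict[str, float]
    outputs_needed: set[str]


def assess_use(permissible: PermissibleUse, proposed: ProposedUse) -> list[str]:
    """Return mismatch findings; an empty list means none were detected."""
    findings = []
    if proposed.domain not in permissible.domains:
        findings.append(f"domain '{proposed.domain}' is not a permissible domain")
    if proposed.purpose not in permissible.purposes:
        findings.append(f"purpose '{proposed.purpose}' is not a permissible purpose")
    for name, value in proposed.parameter_values.items():
        limits = permissible.parameter_limits.get(name)
        if limits is None:
            findings.append(f"parameter '{name}' has no established limits")
        elif not (limits[0] <= value <= limits[1]):
            findings.append(
                f"parameter '{name}'={value} is outside [{limits[0]}, {limits[1]}]"
            )
    for output in sorted(proposed.outputs_needed - permissible.output_types):
        findings.append(f"output '{output}' is not produced by the model")
    return findings
```

Any finding returned by such a check would be a candidate caveat to carry forward into the reported results rather than an automatic rejection of the proposed use.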

It is also prudent to assess the criticality of the situation in which the M&S is used. Critical situations imply a greater need for rigor, control, and documentation (evidence).

Note: this is the second time Criticality is mentioned. The first time was at the initiation of model development, to help guide development. While a model may be developed for an initial intended use (critical or not), the actual use of the model may differ to some degree. Therefore, this criticality assessment is for the ‘use at hand.’

The last task prior to actual use or application of the M&S is to determine or define the scenarios and explicit input for model execution. This can involve a wide variety of data analysis methods, including design of experiments and stochastic variable modeling.

Use

Strictly speaking, the actual ‘use’ of an M&S only consists of setting up the M&S for use and using (or running / executing) it.

Post-Use

After running the M&S, the resulting data is analyzed and then assessed and prepared for dissemination in reports or briefings. During data analysis, value is added to the results by also presenting an understanding of their uncertainty and sensitivity.
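One simple way to get a first view of sensitivity is a one-at-a-time perturbation of each input around a nominal point. The sketch below is illustrative only: the function name, the relative step size, and the toy model are assumptions made for the example, and this method is not prescribed by the Standard. It also ignores interactions between inputs, which variance-based methods would capture.

```python
def one_at_a_time_sensitivity(model, nominal, rel_step=0.01):
    """Central-difference estimate of d(output)/d(input) at the nominal point.

    model    -- callable taking keyword arguments and returning a float
    nominal  -- dict of input name -> nominal value
    rel_step -- perturbation as a fraction of each nominal value (assumed)
    """
    sensitivities = {}
    for name, value in nominal.items():
        # Fall back to an absolute step when the nominal value is zero.
        step = abs(value) * rel_step or rel_step
        high = dict(nominal, **{name: value + step})
        low = dict(nominal, **{name: value - step})
        sensitivities[name] = (model(**high) - model(**low)) / (2 * step)
    return sensitivities


def toy_model(mass, accel):
    """Stand-in for an M&S run: force = mass * acceleration."""
    return mass * accel


sens = one_at_a_time_sensitivity(toy_model, {"mass": 2.0, "accel": 3.0})
```

For the toy model, the estimate for `mass` is approximately the nominal `accel` and vice versa, which is what the analytic derivatives give.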

The NASA Standard for Models and Simulations also requires the reporting of supplementary information, along with the M&S results, to substantiate the credibility of the M&S results and understand the risks associated with accepting the results of the M&S use.

Reporting M&S Results

There is much more to reporting M&S results than the answers output from its use. The risks potentially incurred during the development and use of the M&S, including the factors influencing the credibility of those results, have implications for the acceptance of those results. Clearly conveying these risk elements and credibility factors, along with the criticality associated with the represented real world system, supports a robust decision-making process. Each of these aspects of M&S results reporting is shown in the following diagram. For additional details, refer to the appropriate sections of NASA-STD-7009 or NASA-HDBK-7009. The [M&S x] notations indicate requirement numbers in the NASA Standard.

M&S Criticality is best assessed early in both M&S Development and M&S Use to help inform the needed rigor in M&S processes and products.

Analysis Caveats

Caveats are information included with M&S results to provide cautions or prevent misinterpretation when evaluating, interpreting, or making decisions. This type of information includes:

- Unachieved acceptance criteria

- Violation of assumptions

- Violation of the Limits of Operation

- Execution Warning and Error Messages

- Unfavorable outcomes from the proposed use assessments

- Unfavorable outcomes from Setup/Execution Assessments

- Waivers to Requirements

A placard on the M&S results is warranted if a caveat is significant. The placard helps communicate the potential issue associated with the M&S results.

M&S Results Uncertainty

M&S are neither perfect nor all-inclusive representations, so the results from their use will carry some uncertainty. Uncertainties describe an imperfect state of knowledge or a variability resulting from a variety of factors, including but not limited to lack of knowledge, applicability of information, physical variation, randomness or stochastic behavior, indeterminacy, judgment, and approximation. Uncertainties take many forms, stem from many sources, and should be addressed when reporting M&S results. Assessing M&S uncertainties starts with understanding the following:

- Sources

- Locations

- Characteristics (Type)

- Magnitude

- How well known

- How they are used (incorporated) in the M&S

- How they propagate through the M&S
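To make the idea of propagation concrete, the sketch below pushes input uncertainties through a model by Monte Carlo sampling. It is a minimal illustration, not a method taken from the Standard: the function name, the sampler interface, the run count, and the toy model with its input distributions are all assumptions chosen for the example.

```python
import random
import statistics


def propagate(model, input_samplers, n_runs=5000, seed=0):
    """Estimate the mean and standard deviation of a model output.

    model          -- callable taking keyword arguments and returning a float
    input_samplers -- dict of input name -> function(rng) returning one sample
    n_runs, seed   -- assumed settings; a real study would justify both
    """
    rng = random.Random(seed)  # seeded so the study is repeatable
    outputs = []
    for _ in range(n_runs):
        inputs = {name: draw(rng) for name, draw in input_samplers.items()}
        outputs.append(model(**inputs))
    return statistics.mean(outputs), statistics.stdev(outputs)


def toy_model(a, b):
    """Stand-in for an M&S run."""
    return a + b


# Hypothetical input uncertainties: independent normal distributions.
samplers = {
    "a": lambda rng: rng.gauss(10.0, 1.0),
    "b": lambda rng: rng.gauss(5.0, 2.0),
}
mean, std = propagate(toy_model, samplers)
```

For this toy case the analytic answer is a mean of 15 and a standard deviation of the square root of 5 (about 2.24), so the sampled estimates can be checked against it. Sampling of this kind addresses only the magnitude and propagation items in the list above; the sources, locations, and how well the input distributions are known still have to be established separately.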

For clarity, all M&S results are to be accompanied with one of the following:

- A quantitative estimate of the uncertainty,

- A qualitative description of the uncertainty,

- A clear statement that no quantitative estimate or qualitative description of uncertainty is available.

M&S Credibility

M&S Credibility is the quality to elicit belief or trust in M&S results and cannot be determined directly; however, key factors exist that contribute to a decision-maker’s credibility assessment. The eight factors in NASA’s assessment of M&S Results Credibility were selected from a long list of factors that potentially contribute to the credibility of M&S results because

- they were individually judged to be the key factors in this list

- they are nearly orthogonal, i.e., largely independent, factors

- they can be assessed objectively.

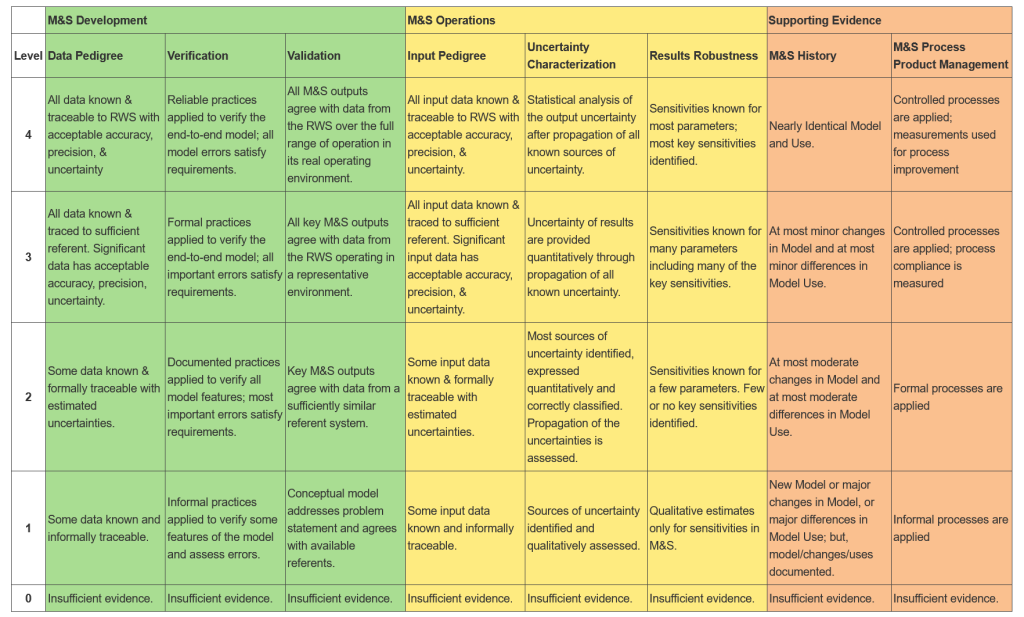

These factors are organized in three groups, as follows:

- M&S Development

  - Data Pedigree: Is the pedigree (and quality) of the data used to develop the model adequate or acceptable?

  - Verification: Were the models implemented correctly, per their requirements/specifications?

  - Validation: Did the M&S results compare favorably to the referent data, and how close is the referent to the RWS?

- M&S Operations

  - Input Pedigree: Is the pedigree (and quality) of the data used to set up and run the model adequate or acceptable?

  - Uncertainty Characterization: Is the uncertainty in the current M&S results appropriately characterized? What are the sources of uncertainty in the results and how are they propagated through to the results of the analysis?

  - Results Robustness: How thoroughly are the sensitivities of the current M&S results known?

- Supporting Evidence

  - M&S History: How similar is the current version of the M&S to previous versions, and how similar is the current use of the M&S to previous successful uses?

  - M&S Management: How well managed were the M&S processes and products?

The following schema was developed to assess each of the credibility factors:

The results of the credibility assessment may be presented to decision makers in a variety of forms. The following graphical methods are just two possibilities.

Credibility Assessment – Bar Chart

Credibility Assessment – Spider Plot (Radar Chart)
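As a rough illustration of the bar-chart form, the sketch below renders one bar per credibility factor as plain text. The factor names are the eight listed above; the 0 to 4 level scale, the function name, and the example scores are assumptions made for this sketch, so consult the Standard for the actual level definitions.

```python
FACTORS = [
    "Data Pedigree",
    "Verification",
    "Validation",
    "Input Pedigree",
    "Uncertainty Characterization",
    "Results Robustness",
    "M&S History",
    "M&S Management",
]
MAX_LEVEL = 4  # assumed top of the assessment scale


def bar_chart(scores, max_level=MAX_LEVEL):
    """Return a text bar chart with one line per credibility factor."""
    width = max(len(factor) for factor in FACTORS)
    lines = []
    for factor in FACTORS:
        level = scores[factor]
        if not 0 <= level <= max_level:
            raise ValueError(f"{factor}: level {level} is outside 0..{max_level}")
        bar = "#" * level + "." * (max_level - level)
        lines.append(f"{factor:<{width}} |{bar}| {level}/{max_level}")
    return "\n".join(lines)


# Hypothetical assessment results, for illustration only.
example_scores = {
    "Data Pedigree": 3,
    "Verification": 4,
    "Validation": 2,
    "Input Pedigree": 3,
    "Uncertainty Characterization": 1,
    "Results Robustness": 2,
    "M&S History": 3,
    "M&S Management": 4,
}
print(bar_chart(example_scores))
```

Keeping the factors in a fixed order means charts from successive assessments can be compared line by line.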

M&S Documentation

Documentation is one of the cornerstones of the NASA Standard for Models and Simulations, with the underlying theme that evidence is required to claim an activity is accomplished or a requirement is satisfied (i.e., if it is not documented, it is not done).

The NASA Handbook for Models and Simulations Table 13 provides a method to synopsize the documentation (evidence) requirements of the Standard. For each of the ‘documentation’ requirements, the following information is requested:

- Do any of the documents exist?

- If yes, what are the documents?

- What is the rationale for proceeding with the reported information?

M&S Technical Review

Technical reviews of M&S development and use processes and products help bolster the acceptability of M&S-based analyses, so knowing the results (or findings) from existing or past technical reviews is valuable. The information in the following table provides supporting material for ascertaining the risks of accepting the results from an M&S-based analysis.

| 7009 Req’t | Reporting Req’t | Do any of the following exist? (Yes / No) | If yes, what are they? | Rationale for proceeding with the reported information |
| --- | --- | --- | --- | --- |
| [M&S 36] | Technical Review | | | |
| | Review | | | |
| | – What was reviewed? | | | |
| | – Depth of Review. | | | |
| | – Formality of Review. | | | |
| | – Currency of Review. | | | |
| | Reviewers | | | |
| | – Expertise. | | | |
| | – Independence. | | | |

People Qualifications

The qualifications and experience of the people developing, testing, using, and analyzing M&S can also provide supporting material for ascertaining the risks of accepting the results from an M&S-based analysis.

| 7009 Req’t | Reporting Req’t | Do any of the following exist? (Yes / No) | If yes, what are they? | Rationale for proceeding with the reported information |
| --- | --- | --- | --- | --- |
| [M&S 37] | People Qualifications | | | |
| | Developers. | | | |
| | Testers. | | | |
| | Users (Operators). | | | |
| | Analysts. | | | |

M&S Risk

The definition of M&S Risk is “the potential for shortfalls with respect to sufficiently representing a Real World System.” Risk may be induced anywhere in the M&S Life Cycle, from creation to use. All of the additional attributes associated with the reporting of M&S results may increase or reduce the overall risk associated with accepting the M&S-based results. Besides the aforementioned attributes, the existence and quality of M&S technical reviews and the qualifications of the people involved in M&S development, use, and analysis also bear on this risk.

NASA M&S Use References:

| Topic | NASA-STD-7009 | NASA-HDBK-7009 |
| --- | --- | --- |
| M&S Use | Section 4.3 | Section 5.6 |
| M&S Use Assessment | | Section 5.6.1.2 |
| M&S Criticality | Appendix D | |
| M&S Caveats | Section 4.3.8 | Section 5.6.3, Table 8 |
| M&S Results Uncertainty | Sections 4.2.7, 4.2.8, 4.3.3, 4.3.4 | Section 5.6.3.1.1; Appendix D.7 |
| M&S Results Credibility Assessment | Appendix E | Section 5.6.3.2.1; Appendix D |
| M&S Risk Assessment Elements | | Section 5.6.3.2.2, Table 14; Section 6.4, Table 20; Appendix E, Table 37 |
| M&S Technical Review | | Section 5.6.3.2, Table 11 |
| M&S People Qualifications | | Section 5.6.3.2, Table 12 |
| M&S Documentation | | Section 5.6.3.2, Table 13 |
| M&S Results Reporting | Section 4.3.8 | Section 5.6.3.2.3 |